APBench and benchmarking large language model performance in fundamental astrodynamics problems for space engineering

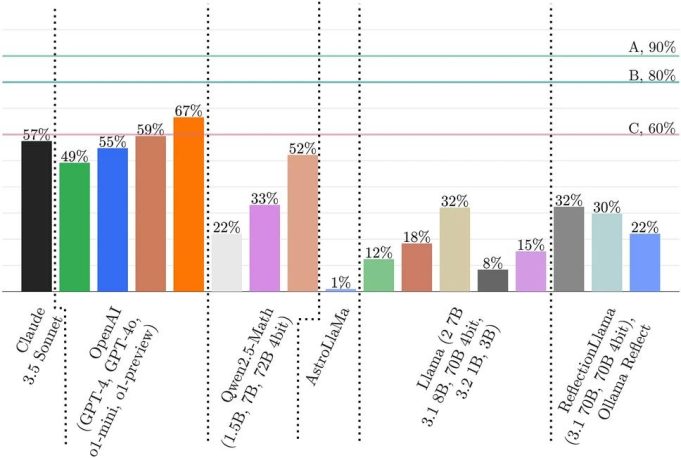

Researchers in ARCLab benchmarked state-of-the-art large language models on a set of fundamental space engineering questions and laid the groundwork for a promising GenAI-era for the future of space engineering.

Authors: Di Wu, Raymond Zhang, Enrico M. Zucchelli, Yongchao Chen, and Richard Linares

Citation: Nature Scientific Reports, March 7 2025

Abstract:

The problem-solving abilities of Large Language Models (LLMs) have become a major focus of research across various STEM fields, including mathematics and physics. Substantial progress has been made in both measuring and enhancing these abilities. Among the multitude of ways to advance space engineering research, one promising direction is the application of LLMs and foundational models in aiding in solving PhDlevel research problems. To understand the full potential of LLMs in astrodynamics, we have developed the first Astrodynamics Problems Benchmark (APBench) to evaluate the capabilities of LLMs in this field. We crafted for the first time a collection of questions and answers that range a wide variety of subfields in astrodynamics, astronautics, and space engineering. On top of this first dataset built for space engineering, we evaluate the performance of foundational models, both open source models and closed ones, to validate their current capabilities in space engineering, and paving the road for further advancements towards a definition of intelligence for space.