Certified Learning for Control

In recent years, deep learning has been widely applied to problems in robotics and control, but the safety and stability of the resulting controllers remain hard to determine. We develop tools to improve the safety of learned controllers and to generate data-driven proofs (certificates) of their correctness.

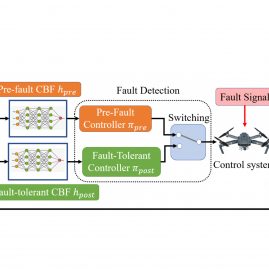

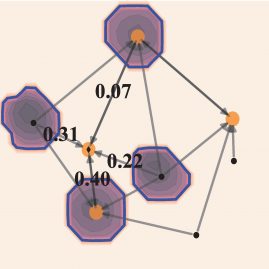

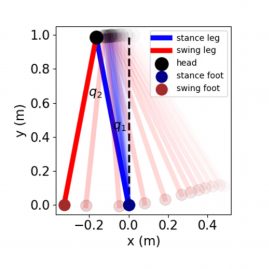

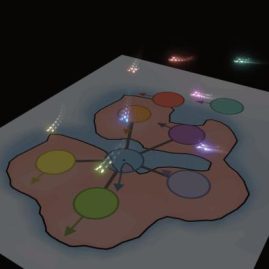

Our research in this area covers three main types of control certificates: Lyapunov functions to prove stability, barrier functions to prove collision avoidance and set invariance, and contraction metrics to prove stabilizability. We have a survey paper introducing learned control certificates in more detail.

Read our survey (to appear in IEEE Transactions on Robotics) on learned certificates.
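To make the first two certificate types concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the method from our papers: the two-state linear system, the quadratic candidate `V`, the barrier `h(x) = level - V(x)`, and the gain `alpha` are all hypothetical choices made for this example. The sketch checks the Lyapunov conditions (V > 0 and dV/dt < 0 away from the origin) and the barrier condition (dh/dt + alpha * h >= 0) on randomly sampled states. Passing a sampled check is evidence, not a proof; a real certificate needs the conditions to hold over the whole region of interest. Contraction metrics are omitted here for brevity.

```python
import random


def dynamics(x1, x2):
    """Hypothetical closed-loop system: a damped linear oscillator."""
    return x2, -x1 - x2


def lyapunov(x1, x2):
    """Candidate Lyapunov function V(x) = 1.5*x1^2 + x1*x2 + x2^2 (assumed)."""
    return 1.5 * x1**2 + x1 * x2 + x2**2


def lyapunov_derivative(x1, x2):
    """dV/dt along trajectories: the gradient of V dotted with the dynamics."""
    dx1, dx2 = dynamics(x1, x2)
    dV_dx1 = 3.0 * x1 + x2
    dV_dx2 = x1 + 2.0 * x2
    return dV_dx1 * dx1 + dV_dx2 * dx2


def barrier(x1, x2, level=1.0):
    """Candidate barrier h(x) = level - V(x); the safe set is {x : h(x) >= 0}."""
    return level - lyapunov(x1, x2)


def barrier_derivative(x1, x2):
    """dh/dt along trajectories; equals -dV/dt because level is constant."""
    return -lyapunov_derivative(x1, x2)


def count_violations(num_samples=10_000, alpha=0.5, seed=0, tol=1e-9):
    """Count sampled states that violate each certificate condition."""
    rng = random.Random(seed)
    lyapunov_violations = 0
    barrier_violations = 0
    for _ in range(num_samples):
        x1 = rng.uniform(-2.0, 2.0)
        x2 = rng.uniform(-2.0, 2.0)
        if x1 == 0.0 and x2 == 0.0:
            continue  # the conditions are only required away from the origin
        # Lyapunov (stability): V positive and strictly decreasing.
        if lyapunov(x1, x2) <= 0.0 or lyapunov_derivative(x1, x2) >= 0.0:
            lyapunov_violations += 1
        # Barrier (set invariance): dh/dt + alpha * h >= 0.
        if barrier_derivative(x1, x2) + alpha * barrier(x1, x2) < -tol:
            barrier_violations += 1
    return lyapunov_violations, barrier_violations


if __name__ == "__main__":
    lyap_bad, barrier_bad = count_violations()
    print(f"Lyapunov violations: {lyap_bad}, barrier violations: {barrier_bad}")
```

For this particular system, dV/dt simplifies to -(x1^2 + x2^2), so both conditions can also be verified by hand. In a learned setting, `lyapunov` and `barrier` would be replaced by trained models, and violations found by sampling would typically be fed back as training data.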

We’re particularly interested in several open research questions in this area:

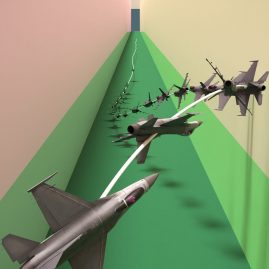

- How can we learn certificates that scale to large autonomous systems (e.g. multi-agent systems, networked systems, and decentralized settings)?

- How much knowledge of the underlying model do we need in order to construct a certificate?

- Can we certify controllers that operate on high-dimensional feedback (e.g. image-based controllers)?

- How can we make certificates robust to uncertainties in the environment, actuator failures, or adversarial attacks?

We’ve started to answer some of these questions in our research, and you can find a list of some of our relevant papers below.