RSS 2025 Paper Announcements

We are excited to announce that REALM will be presenting two papers at RSS 2025! Below you will find a brief description of each paper and a link to its dedicated project website.

Solving Multi-Agent Safe Optimal Control with Distributed Epigraph Form MARL

Songyuan Zhang¹*, Oswin So¹*, Mitchell Black², Zachary Serlin², Chuchu Fan¹

¹Massachusetts Institute of Technology, ²MIT Lincoln Laboratory, *Equal contribution

Tasks for multi-robot systems often require the robots to collaborate and complete a team goal while maintaining safety. This problem is usually formalized as a constrained Markov decision process (CMDP), which targets minimizing a global cost while bringing the mean constraint violation below a user-defined threshold. Inspired by real-world robotic applications, we define safety as zero constraint violation. While many safe multi-agent reinforcement learning (MARL) algorithms have been proposed to solve CMDPs, these algorithms suffer from unstable training in this setting. To tackle this, we use the epigraph form for constrained optimization to improve training stability and prove that the centralized epigraph form problem can be solved in a distributed fashion by each agent. This results in a novel centralized training, distributed execution MARL algorithm named Def-MARL. Simulation experiments on 8 different tasks across 2 different simulators show that Def-MARL achieves the best overall performance, satisfies safety constraints, and maintains stable training. Real-world hardware experiments on Crazyflie quadcopters demonstrate that, compared to other methods, Def-MARL safely coordinates agents to complete complex collaborative tasks.
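For readers unfamiliar with the epigraph form, here is the generic construction in our own notation (not necessarily the paper's): J(π) is the team's cumulative cost under joint policy π, and h(π) is the largest constraint violation along the resulting trajectory, so zero constraint violation means h(π) ≤ 0. Introducing an auxiliary scalar z that upper-bounds the cost gives the following equivalences, assuming the minima are attained:

$$
\begin{aligned}
\min_{\pi}\; J(\pi)\ \ \text{s.t.}\ \ h(\pi) \le 0
\quad&\Longleftrightarrow\quad
\min_{\pi,\, z}\; z\ \ \text{s.t.}\ \ J(\pi) \le z,\ \ h(\pi) \le 0 \\
&\Longleftrightarrow\quad
\min_{z}\; z\ \ \text{s.t.}\ \ \min_{\pi}\, \max\{\, J(\pi) - z,\ h(\pi) \,\} \le 0 .
\end{aligned}
$$

For a fixed z, the inner problem is an unconstrained minimization of max{J(π) - z, h(π)}, and the outer problem is a one-dimensional search over z.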

Project Website: https://mit-realm.github.io/def-marl/

- Drawing on prior work that addresses the training instability of Lagrangian methods in the zero constraint violation setting, we extend the epigraph form method from single-agent RL to MARL, addressing the training instability of existing MARL algorithms.

- Without any hyperparameter tuning, Def-MARL achieves stable training and is as safe as the most conservative baseline while simultaneously being as performant as the most

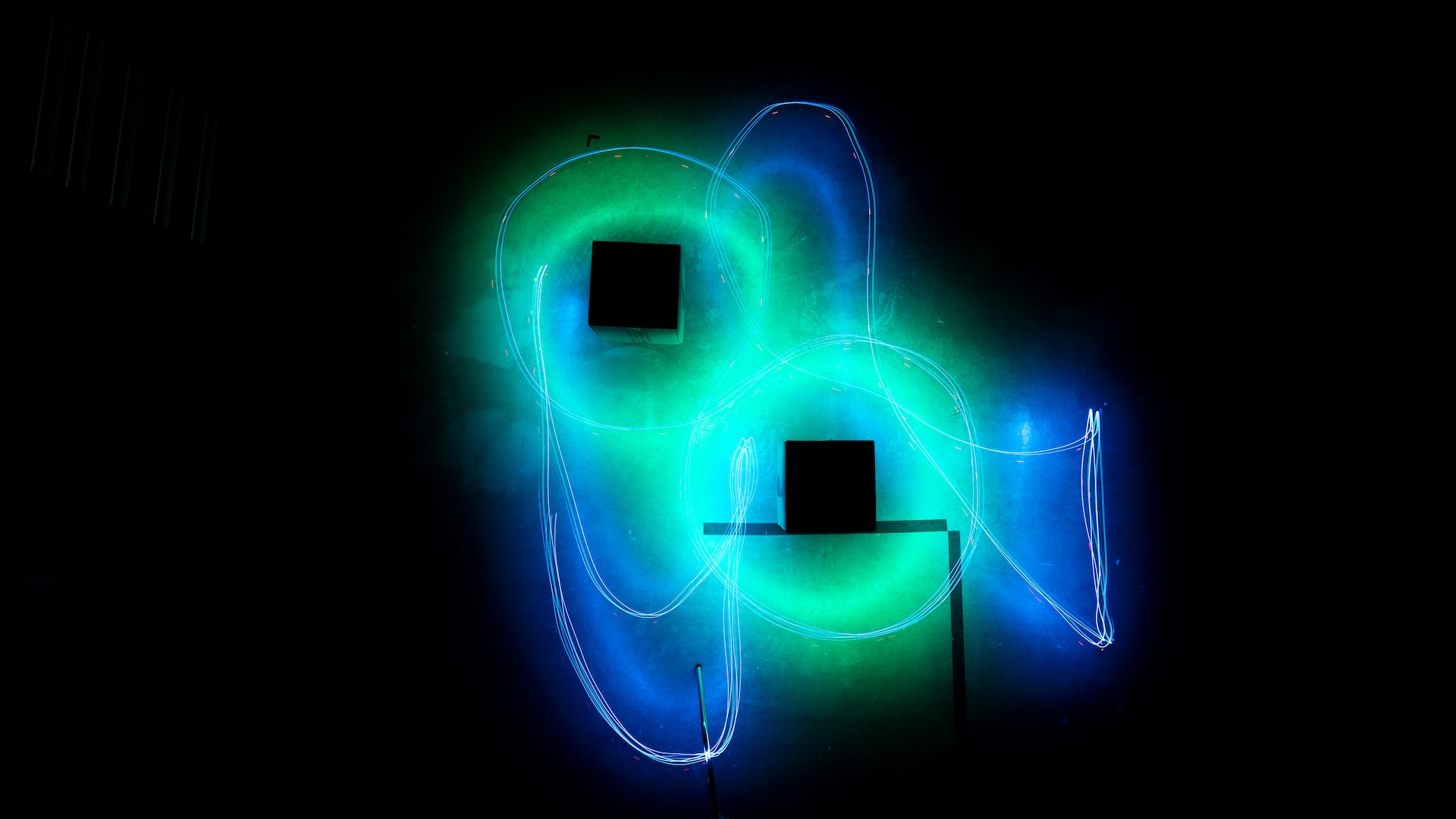

aggressive baseline across all environments. - We demonstrate on Crazyflie drones in hardware that Def-MARL can safely coordinate agents to complete complex collaborative tasks. Def-MARL performs the task better than centralized/decentralized MPC methods and does not get stuck in suboptimal local minima or exhibit unsafe behaviors.
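To make the epigraph idea concrete, the toy sketch below replaces the policy class with a short list of hypothetical candidate policies, each summarized only by its cost J and its worst-case constraint violation h (h ≤ 0 meaning safe). It searches for the smallest auxiliary value z for which the inner value, the minimum over candidates of max(J - z, h), is at most 0; because that inner value is nonincreasing in z, bisection suffices. This illustrates the epigraph form only, not Def-MARL itself, which learns policies with MARL and solves the inner problem in a distributed fashion across agents; the function names are our own.

```python
import numpy as np


def inner_value(z, costs, violations):
    """Inner epigraph problem for a fixed z: min over candidates of max(J - z, h)."""
    return float(np.min(np.maximum(costs - z, violations)))


def solve_epigraph(costs, violations, z_lo, z_hi, iters=60):
    """Smallest z in [z_lo, z_hi] with inner_value(z) <= 0, found by bisection.

    Returns (z, index of the candidate attaining the inner minimum at that z).
    """
    costs = np.asarray(costs, dtype=float)
    violations = np.asarray(violations, dtype=float)
    if inner_value(z_hi, costs, violations) > 0:
        raise ValueError("no candidate is safe with cost <= z_hi")
    # inner_value is nonincreasing in z, so the feasible z values form [z*, z_hi].
    for _ in range(iters):
        z_mid = 0.5 * (z_lo + z_hi)
        if inner_value(z_mid, costs, violations) <= 0:
            z_hi = z_mid  # feasible: the optimum is at or below z_mid
        else:
            z_lo = z_mid  # infeasible: the optimum is above z_mid
    best = int(np.argmin(np.maximum(costs - z_hi, violations)))
    return z_hi, best


# Three hypothetical candidates: the cheapest is unsafe (h > 0), the other two are safe.
z_star, best = solve_epigraph(costs=[1.0, 2.0, 3.0], violations=[0.5, 0.0, -1.0],
                              z_lo=0.0, z_hi=10.0)
print(round(z_star, 6), best)  # 2.0 1
```

Here the cheapest candidate is excluded because it violates the constraint, and the search settles on the lowest-cost candidate that is safe.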

Safe Beyond the Horizon: Efficient Sampling-based MPC with Neural Control Barrier Functions

Ji Yin¹*, Oswin So²*, Eric Yu², Chuchu Fan², Panagiotis Tsiotras¹

¹Georgia Institute of Technology, ²Massachusetts Institute of Technology, *Equal contribution

A common problem when using model predictive control (MPC) in practice is the satisfaction of safety specifications beyond the prediction horizon. While theoretical works have shown that safety can be guaranteed by enforcing a suitable terminal set constraint or a sufficiently long prediction horizon, these techniques are difficult to apply and thus are rarely used by practitioners, especially in the case of general nonlinear dynamics. To solve this problem, we strike a tradeoff between exact recursive feasibility, computational tractability, and applicability to "black-box" dynamics by learning an approximate discrete-time control barrier function and incorporating it into variational inference MPC (VIMPC), a sampling-based MPC paradigm. To handle the resulting state constraints, we further propose a new sampling strategy that greatly reduces the variance of the estimated optimal control, improving sample efficiency and enabling real-time planning on a CPU. The resulting Neural Shield-VIMPC (NS-VIMPC) controller yields substantial safety improvements compared to existing sampling-based MPC controllers, even under badly designed cost functions. We validate our approach in both simulation and real-world hardware experiments.
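One way to see how a value function can act as a discrete-time CBF is tabular policy evaluation. The sketch below assumes a finite state set, a fixed deterministic policy given as a next-state table, and the convention that h(x) ≤ 0 means x is safe; it computes V(x) = max over t ≥ 0 of h(x_t) along the policy's trajectory using the backup V(x) = max(h(x), V(next(x))). It is a hypothetical, simplified stand-in: NS-VIMPC learns an approximate discrete-time CBF trained with policy evaluation, presumably over continuous states with a neural network, and none of the names below come from the paper's code.

```python
import numpy as np


def evaluate_max_violation(h, next_index):
    """Tabular policy evaluation of V(x) = max_{t >= 0} h(x_t) under a fixed policy.

    h[i] is the constraint value at state i (h <= 0 is safe, an assumed convention);
    next_index[i] is the state the fixed deterministic policy moves to from state i.
    Uses the backup V(x) = max(h(x), V(next(x))).
    """
    h = np.asarray(h, dtype=float)
    next_index = np.asarray(next_index, dtype=int)
    v = h.copy()  # after k sweeps, v[i] = max of h over the first k steps from state i
    for _ in range(len(h)):  # n sweeps cover every state reachable from any start
        v_new = np.maximum(h, v[next_index])
        if np.array_equal(v_new, v):
            break  # fixed point reached
        v = v_new
    return v


# Five hypothetical states on a line; state 0 is an obstacle (h > 0), state 4 is absorbing.
h = [0.5, -1.0, -1.0, -1.0, -1.0]
next_index = [0, 0, 3, 4, 4]  # hypothetical policy: state 1 steps into the obstacle
v = evaluate_max_violation(h, next_index)
print(v.tolist())  # [0.5, 0.5, -1.0, -1.0, -1.0]
```

Because V(x) = max(h(x), V(next(x))) ≥ V(next(x)), V never increases along the policy's trajectories, so the set {V ≤ 0} is forward invariant under that policy. In the example, state 1 is flagged unsafe even though h(1) ≤ 0, because the policy leads it into the obstacle one step later.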

Project Website: https://mit-realm.github.io/ns-vimpc/

- Extend policy neural CBF (PNCBF) to the discrete-time case and propose a novel approach to train a discrete-time PNCBF (DPNCBF) using policy evaluation.

- Propose Resampling-Based Rollout (RBR), a novel sampling strategy inspired by particle filtering for handling state constraints in VIMPC, which significantly improves the sampling efficiency by lowering the variance of the estimated optimal control.

- Simulation results on two benchmark tasks show the efficacy of NS-VIMPC compared to existing sampling-based MPC controllers in terms of safety and sample efficiency.

- Hardware experiments on AutoRally, a 1/5-scale autonomous driving platform, demonstrate the robustness of NS-VIMPC to unmodeled dynamical disturbances under adversarially tuned cost functions.
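The particle-filtering idea behind RBR can be sketched as follows, under our own assumptions since the details of RBR are not given here: random control sequences are rolled out step by step, and after each step any particle whose next state violates the state constraint is replaced by a copy (state and control history) of a randomly chosen particle that satisfied it. The single-integrator dynamics, the constraint x < 1, and all names are hypothetical; in NS-VIMPC the constraint would come from the learned CBF, and the surviving sequences would then be weighted by cost to estimate the optimal control, a step omitted here.

```python
import numpy as np


def resampling_rollout(x0, dynamics, is_safe, horizon, n_particles, noise_std, rng):
    """Sample control sequences and roll them out, particle-filter style (hypothetical).

    After every step, particles whose next state violates the state constraint are
    replaced by copies (state and control history) of randomly chosen safe particles,
    so every returned sequence respects the constraint at every step.
    """
    states = np.tile(np.asarray(x0, dtype=float), (n_particles, 1))
    controls = np.zeros((n_particles, horizon))  # scalar control per step, for simplicity
    for t in range(horizon):
        u = noise_std * rng.standard_normal(n_particles)
        next_states = dynamics(states, u)
        controls[:, t] = u
        safe = is_safe(next_states)
        safe_idx = np.flatnonzero(safe)
        if safe_idx.size == 0:
            raise RuntimeError(f"all particles violated the constraint at step {t}")
        # Safe particles keep themselves; each unsafe one copies a random safe particle.
        src = np.arange(n_particles)
        unsafe_idx = np.flatnonzero(~safe)
        src[unsafe_idx] = rng.choice(safe_idx, size=unsafe_idx.size)
        states = next_states[src]
        controls = controls[src]
    return states, controls


# Hypothetical 1-D single integrator x_{t+1} = x_t + u_t with the constraint x < 1.
rng = np.random.default_rng(0)
final_states, sequences = resampling_rollout(
    x0=[0.0],
    dynamics=lambda x, u: x + u[:, None],
    is_safe=lambda x: x[:, 0] < 1.0,
    horizon=20, n_particles=256, noise_std=0.3, rng=rng,
)
print(final_states.max() < 1.0)  # True
```

The intuition for resampling only from constraint-satisfying particles is that the whole sample budget stays inside the feasible region instead of being spent on sequences that would later be rejected or heavily penalized.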